Features new Intel Core i7 processor with the power to take on the most demanding digital duties today – and tomorrow

The Studio XPS desktop configured with an optional TV tuner is an ideal choice as the center of a home entertainment system:

Support for up to 1TB of hard drive space to store music libraries, favorite movies and TV shows.

Optional Blu-ray Disc™ drive can alleviate the need to purchase, make room for and set up a separate Blu-ray player.

The Studio XPS desktop features 64-bit versions of Microsoft® Windows Vista® so it can take advantage of up to 12GB tri-channel DDR3 memory, which can improve performance in multitasking and memory-intensive applications like photo and video editing software.

Robot Touchscreen Analysis from MOTO Development Group on Vimeo.

A touchscreen is a touchscreen, right? Hardly! As MOTO pointed out in our recent Do-It-Yourself Touchscreen Analysis post, “All touchscreens are not created equal.”

With our simple test technique — which basically consists of using a basic drawing application and a finger to slowly trace straight lines on the screen of each device — it’s easy to see the difference in touchscreen resolution from one phone to the next. Results with straight lines indicate a high degree of sensor accuracy; less-precise sensors show the lines with wavy patterns, stair-steps, or both.
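One way to put a number on "straight versus wavy" is to measure how far the traced samples stray from an ideal line. The sketch below is an illustration only, not MOTO's method: the function name `max_deviation` and the sample coordinates are hypothetical, and it assumes the touch samples are available as (x, y) pairs.

```python
import math


def max_deviation(points):
    """Return the largest perpendicular distance from any sample to the
    straight chord joining the first and last samples."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    length = math.hypot(x1 - x0, y1 - y0)
    if length == 0:
        raise ValueError("first and last samples coincide")
    # Standard point-to-line distance, evaluated for every sample.
    return max(
        abs((x1 - x0) * (y0 - y) - (x0 - x) * (y1 - y0)) / length
        for x, y in points
    )


# Hypothetical traces: one perfectly straight, one with a wavy pattern.
straight_trace = [(i, 2 * i) for i in range(10)]
wavy_trace = [(0, 0), (1, 1), (2, 0), (3, -1), (4, 0)]

print("straight:", max_deviation(straight_trace))
print("wavy:", max_deviation(wavy_trace))
```

A smaller value means the reported samples hug the intended line more closely. Using the chord between the endpoints keeps the sketch short; a least-squares fit would be a reasonable alternative.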

After we published our first comparison of four touchscreen smartphones, a few critics found fault with our DIY testing technique. Many of these comments centered around the idea that our human-finger methodology is prone to inconsistency, due to variables in finger pressure, line-straightness, or tracing speed.

Human Error?

Our response to these arguments is pretty simple: These are all fair points. Nevertheless, we’re confident that such inconsistencies do not distort the basic results of our touchscreen shootout. In other words, the inconsistencies are real, but they don’t make much difference.

Nevertheless, to satisfy the critics, we decided to give them exactly what they asked for: We wrote a script for MOTO’s laboratory robot and then re-ran the comparison to see how the touchscreens stack up when the lines are drawn by our robot’s slow and precise “finger.” (See the robot in action, in video below.)

Add Some New Contenders

Before running the robot test, we also decided to satisfy the many requests we received to add the Palm Pre and the Blackberry Storm 2 to the mix. How did the new phones perform? The Blackberry and the Palm touchscreens both performed fairly well. The iPhone still retains its crown as King of the smartphone touchscreens, with the Nexus One in a distant second. Take a look:

Understanding the Results

Touchscreen performance variation occurs because there is no out-of-the-box solution for manufacturers that hope to install multi-touch screens in consumer electronic devices.

To get it right, gadget-makers have to assemble a variety of critical elements — screen hardware, software algorithms, sensor tuning, and user-interface design, to name but a few — and then refine each component of the stack to deliver the best touchscreen experience possible. It’s a complex and laborious process that requires extremely close collaboration between multidisciplinary teams, as well as a high-level vision for a quality end-user experience.

Indeed, from a consumer perspective, what matters most isn’t the performance of the touchscreen itself, but how well a touchscreen performs in combination with its operating system and user-interface to deliver an experience that is satisfying overall.

Still, it’s useful to look at touchscreen performance in isolation, because it is a central ingredient in the mix and a good indicator of how satisfying a touchscreen experience is likely to be.

Watch the video for the full story.

Does the Drawing App Make a Difference?

Some readers who saw our last DIY Touchscreen Analysis post wondered what drawing applications we used, and if the drawing application could influence the results by either compensating for or distorting hardware performance.

Developers who create drawing apps sometimes add smoothing algorithms to make the input look more natural. However, the artifacts of these algorithms are fairly easy to identify with casual exploration. We chose drawing applications which we found to do minimal (if any) smoothing of the input data.

In any case, smoothing is most effective only if you are moving quickly – with the snail-like pace of the test robot, you can see that the data, as captured, appears immediately on the screen and never changes to a “smoothed” version.
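For readers curious what "smoothing" means in practice, here is a minimal sketch of one common approach, a trailing moving average. It is an assumption for illustration only: the apps listed here do not document their algorithms, and `moving_average` and the sample values are hypothetical.

```python
def moving_average(values, window=3):
    """Smooth a sequence by averaging each sample with up to
    `window - 1` samples that came before it."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for i in range(len(values)):
        start = max(0, i - window + 1)
        chunk = values[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# Hypothetical stair-step in the reported y-coordinate of a traced line.
raw_y = [0, 0, 3, 3, 0, 0]
print(moving_average(raw_y))
```

The averaged values rise and fall more gradually than the raw ones, which is why smoothing in an app could hide stair-steps that the sensor actually reported.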

Of course you don’t have to take our word for it – try it yourself! Here are the apps we used:

- Blackberry Storm: Canvas

- iPhone: SimpleDraw

- Droid Eris, Droid: DrawNoteK

- Palm Pre: Super Paint

- Google Nexus One: SimplyDraw

Human v. Robot

Finally, as predicted, the lineup below shows how our simple finger-test correlates quite closely with the more formal results we got when we used our ultra-precise, ultra-consistent robot in MOTO’s laboratories:

Indeed, notice that by and large, the results look even worse in the robot tests. That’s because the robot drew lines at only a quarter-inch per second, much more slowly than our DIY test.

And as we’ve explained previously, low speed is crucial to testing the true performance of the screen, because tracing at high speeds skips over the many data points captured at slow speed, causing lines to look straighter than they actually are. Because the robotic finger is somewhat less compliant than a human finger, it is a little harder for the screen to detect. This confuses poor screens even more than when humans attempt the test.

A Prediction

In the long run, however, we don’t expect this high degree of touchscreen variation between handset manufacturers to continue in such dramatic form.

Right now, capacitive touchscreens are a relatively new feature to appear in consumer electronics products. And as we’ve pointed out several times before, creating a seamless touchscreen experience is hard work that requires a high level of commitment to technology integration and interdisciplinary teamwork. Over time more brand-name manufacturers will acquire the expertise required to deliver excellent touchscreen products.

We know for a fact that the solutions in these phones (other than the iPhone) are all last-generation silicon and touch panel components – the other touch screen makers are hard at work perfecting their new solutions, and they may just leapfrog Apple in some areas when they arrive on the market over the next year.

Just consider the “door slam test” that’s often used to evaluate the build-quality of automobiles. Like touchscreen devices, cars are complex machines that require a high level of system integration. A decade ago, the difference in quality between established manufacturers like BMW or Mercedes and a relative newcomer like Hyundai was dramatic. A door-slam on the former felt solid and precise; the latter felt loose and tinny. Yet today Hyundai has closed the gap, and many of the company’s cars pass the door-slam test in world-class style.

In other words, practice can help make perfect. It’ll be interesting to re-run our touchscreen test a year or two from now to see how the playing field starts to even-up.

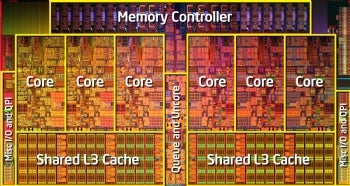

Intel has announced its latest Extreme Edition processor, the Core i7-980X. Like the recently released 2010 Clarkdale lineup, the i7-980X (previously code-named Gulftown) brings Intel's turbo boost and hyperthreading technologies to the 32nm process. The i7-980X is also Intel's first processor with six physical cores, offering increased system performance in applications optimized to take advantage of them.

The Core i7-980X will essentially replace Intel's current performance king, the 45nm Core i7-975 Extreme Edition. While the Core i7-975 will still be available, the new six-core processor will be offered at the same $999 price point--that's six cores for the price of four! But how much of a difference can two extra cores make?

At a glance, the Core i7-975 and the Core i7-980X are identical. Both sport a base clock speed of 3.33GHz, report a TDP rated at 130W, and support three channels of DDR3-1066 memory. But the two additional cores give the i7-980X six cores and 12 threads for an application to work with, versus four cores and 8 threads in the i7-975.

You'll also find a 12MB L3 cache shared across all six of those cores, as opposed to the 8MB cache in the i7-975. A processor's cache functions as a memory storage area, where frequently accessed data remains readily accessible. A larger L3 cache shared across all six cores allows data to be exchanged among them far more readily, improving performance in multithreaded applications. With a large cache and four extra virtual threads, you'd expect to find the greatest appreciable performance difference between the two chips in applications designed to take advantage of multiple cores--and our test results reflected as much.

For our tests, Intel provided a pair of DX58SO motherboards. Serial upgraders should be pleased to note that the Core i7-980X is compatible with existing X58 chipsets. Just drop it into your existing motherboard, and you're (almost) ready to go; we had to perform a required, but painless, BIOS update. Our second test bed was equipped with the aforementioned Core i7-975 Extreme Edition processor. Both test beds also carried 6GB of RAM, 1TB hard drives, ATI Radeon HD 5870 graphics cards, and optical drives for loading software. We ran all of our tests on Windows 7 Ultimate Edition (64-bit).

Intel is pitching the Core i7-980X as the premier part for the enthusiast gaming crowd. In our tests, we did see some improvements over the Core i7-975, but they were marginal. In Unreal Tournament 3 (1920-by-1200 resolution, high settings), the Core i7-980X cranked out 159.9 frames per second as compared to the Core i7-975's 155.4 fps, a 2.8 percent improvement. In Dirt 2, the Core i7-980X offered 73.3 fps, against the Core i7-975's 71.7 fps--a 2.2 percent increase.
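The size of those gains is simple to recompute from the frame rates quoted above. The snippet below is a small sketch with a hypothetical helper, `percent_gain`. Because the inputs are the rounded figures from the text, the last digit may differ slightly from the percentages given.

```python
def percent_gain(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100


# Frame rates from the text: (Core i7-980X, Core i7-975).
results = {
    "Unreal Tournament 3": (159.9, 155.4),
    "Dirt 2": (73.3, 71.7),
}

for game, (i7_980x, i7_975) in results.items():
    print(f"{game}: {percent_gain(i7_980x, i7_975):.1f}% faster")
```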

Those results are hardly surprising. Despite the proliferation of multicore processors, many modern video games have yet to take full advantage of multithreading. Sega's recently released Napoleon: Total War and Ubisoft's upcoming R.U.S.E. have both touted their Core i7-980X optimization, claiming greater detail and realism thanks to simply having more physical cores to work with.

Other games boasting optimization for more than four processor threads include Ubisoft's Far Cry 2, Capcom's Resident Evil 5, and Activision's Ghostbusters. That being said, if you recently sprang for a Core i7-975 and are strictly a gamer, there's no need to curse your poor timing--at least, not until more developers fully commit to the multithreaded bandwagon.

If, on the other hand, you spend much of your time working with multithreaded applications--including Blender, Adobe Photoshop, and Sony Vegas Pro--coughing up $1000 for your workstation's processor might not necessarily be a bad idea.

The most tangible results will be apparent in applications designed to sprawl across as many cores as possible. Take Maxon's Cinema 4D, 3D animation software used by professionals in numerous industries. In Maxon's Cinebench CPU benchmark--which can utilize up to 64 processor threads--the six-core i7-980X saw a 40 percent improvement in performance over the quad-core i7-975.

When considering a processor with a 130W TDP, there's a good chance that saving a few bucks on your energy bill isn't your chief concern. Nevertheless, the Core i7-980X does offer perceptible gains over the i7-975. With all power-saving features disabled, power utilization at peak levels for the i7-980X was 210 watts, versus the i7-975's 231 watts. That's a 10 percent difference in what seems like the wrong direction, indicative of the potential power savings of the smaller 32nm process.

There's a lot to like here, but that's to be expected--this is a $1000 piece of silicon, after all. As far as gamers are concerned, the i7-980X may not blow the i7-975 out of the water currently, but in this case the performance bottleneck lies in the lack of available multithreaded offerings--a trend that's already begun to change. If this chip is in your price bracket, it's well worth the cost of entry provided that you haven't plunked down for an Extreme Edition processor too recently. And as multicore processors and multicore-optimized applications become increasingly common, you'll be able to put all six of those cores to good use--for work and play.

It’s official: Google has just announced Google Buzz, its newest push into the social media fray. This confirms earlier reports of Gmail integrating a social status feature.

On stage revealing the new product was Bradley Horowitz, Google’s vice president for product management. While introducing the product, Mr. Horowitz focused on the human penchant for sharing experiences and the social media phenomenon of wanting to share them in real time. These two key themes were core philosophies behind Google Buzz.

“It’s becoming harder and harder to find signal in the noise,” Bradley stated before introducing the product manager for Google Buzz, Todd Jackson.

Here are the details:

Google Buzz: The Details

- Mr. Jackson introduced “a new way to communicate within Gmail.” It’s “an entire new world within Gmail.” Then he introduced the five key features that define Google Buzz:

- Key feature #1: Auto-following

- Key feature #2: Rich, fast sharing experience

- Key feature #3: Public and private sharing

- Key feature #4: Inbox integration

- Key feature #5: Just the good stuff

- Google then began the demo. Once you log into Gmail, you’ll be greeted with a splash page introducing Google Buzz.

- There is a tab right under the inbox, labeled “Buzz”

- It provides links to websites, content from around the web. Picasa, Twitter, Flickr and other sites are aggregated.

- It shows thumbnails when linked to photos from sites like Picasa and Flickr. Clicking on an image will blow up the images to almost the entire browser, making them easier to see.

- It uses the same keyboard shortcuts as Gmail. This makes sense. Hitting “R” allows you to comment/reply to a buzz post, for example.

- There are public and private settings for different posts. You can post updates to specific contact groups. This is a lot like Facebook friend lists.

- Google wants to make sure you don’t miss comments, so it has a system to send you an e-mail letting you know about updates. However, the e-mail will actually show you the Buzz you’ve created and all of the comments and images associated with it.

- Comments update in real time.

- @replies are supported, just like Twitter. If you @reply someone, it will send a buzz toward an individual’s inbox.

- Google Buzz has a “recommended” feature that will show buzzes from people you don’t follow if your friends are sharing or commenting on that person’s buzz. You can remove it or change this in settings.

- Google is now speaking about using algorithms to help filter conversations, as well as mobile devices related to Buzz.

The Mobile Aspect

- Google Buzz will be accessible via mobile in three ways: from Google Mobile’s website, from Buzz.Google.com (iPhone and Android), and from Google Mobile Maps.

- Buzz knows where you are. It will figure out what building you are in and ask you if it’s right.

- Buzz has voice recognition and posts what you say right onto your buzz in real time. It also geotags your buzz posts.

- Place pages integrate Buzz.

- In the mobile interface, you can click “nearby” and see what people are saying nearby. NIFTY, if I say so myself.

- You can layer Google Maps with Buzz. You can also associate pictures with buzz within Google Maps.

- Conversation bubbles will appear on your Google Maps. They are geotagged buzz posts, which lets you see what people are saying nearby.

The long-awaited depletion of the Internet's primary address space came one step closer to reality on Tuesday with the announcement that fewer than 10 per cent of IPv4 addresses remain unallocated.

The Number Resource Organization (NRO), the official representative of the five Regional Internet Registries, made the announcement. The Regional Internet Registries allocate blocks of IP addresses to ISPs and other network operators.

The NRO is urging Internet stakeholders — including corporations, government agencies, ISPs, IT vendors and users — to take immediate action and begin deploying the next-generation Internet Protocol known as IPv6, which has vastly more address space than today's IPv4.

"This is a key milestone in the growth and development of the global Internet," said Axel Pawlik, Chairman of the NRO, in a statement. "With less than 10 per cent of the entire IPv4 address range still available for allocation to RIRs, it is vital that the Internet community take considered and determined action to ensure the global adoption of IPv6."

IPv4 is the Internet's main communications protocol. IPv4 uses 32-bit addresses and can support around 4 billion IP addresses.

Designed as an upgrade to IPv4, IPv6 uses a 128-bit addressing scheme and can support so many billions of IP addresses that the number is too big for most non-techies to understand. (IPv6 supports 2 to the 128th power of IP addresses.) IPv6 has been available since the mid-1990s, but deployment of IPv6 began in earnest last year.
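To give those address-space figures some scale, this short Python sketch uses the standard library's `ipaddress` module to count the addresses in the entire IPv4 and IPv6 ranges. It is an illustration only, not part of the NRO announcement, and the variable names are arbitrary.

```python
import ipaddress

# The /0 networks cover every possible address in each protocol.
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses
ipv6_total = ipaddress.ip_network("::/0").num_addresses

print(f"IPv4 (32-bit):  {ipv4_total:,} addresses")
print(f"IPv6 (128-bit): {ipv6_total:,} addresses")

# Each extra bit doubles the space, so IPv6 is 2**96 times larger.
print("IPv6 / IPv4 ratio is 2**96:", ipv6_total // ipv4_total == 2**96)
```

The IPv4 total works out to roughly 4.3 billion, which matches the "around 4 billion" figure quoted above.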

The NRO recommends IPv6 as a way of ensuring that the Internet can support billions of additional people and devices. The NRO recommends the following actions:

* Businesses should provide IPv6-capable services and platforms.

* Software and hardware vendors should sell products that support IPv6.

* Government agencies should provide IPv6-enabled content and services, encourage IPv6 deployment in their countries, and purchase IPv6-compliant hardware and software.

* Users should request IPv6 services from their ISPs and IT vendors.

NRO officials warned of "grave consequences in the very near future" if the Internet community fails to recognize the rapid depletion of IPv4 addresses.